LMD (2008)


Music for Last Manoeuvres in the Dark

Last Manoeuvres in the Dark (LMD) is an installation for SUPERDOME (May 29 to August 24, 2008) at Palais de Tokyo (Paris) by Fabien Giraud and Raphael Siboni, with music by Robin Meier and Frédéric Voisin.

In early 2008, Robin Meier and Frédéric Voisin created an autonomous musical network that learns from a database of a thousand musical fragments, ranging from Fauré’s Requiem to hardcore techno, in order to compose the darkest tunes of all time. This generative music system consists of a cluster, or orchestra, of 300 microprocessors (agents) emulating behaviors observed in the biological world. Each agent runs a neural net and is capable of interacting with neighboring agents, learning from the musical database, and generating new musical material in real time. Without any human interaction, this society of agents generates a continually evolving mix that tends towards a state of abyssal musical darkness by controlling a set of digital synthesizers and drum machines.

The work on this system draws on multiple areas of research, most importantly short-term temporal structures in music, music information retrieval (MIR), automated harmonic analysis, and musical stylistic vocabulary. These aspects, along with a multitude of technical issues, were automated and integrated into an autonomous computer system capable of running for several months.

Audio examples of music generated by this computer system, a live recording from Palais de Tokyo (August 5, 2008, 12:57 pm):

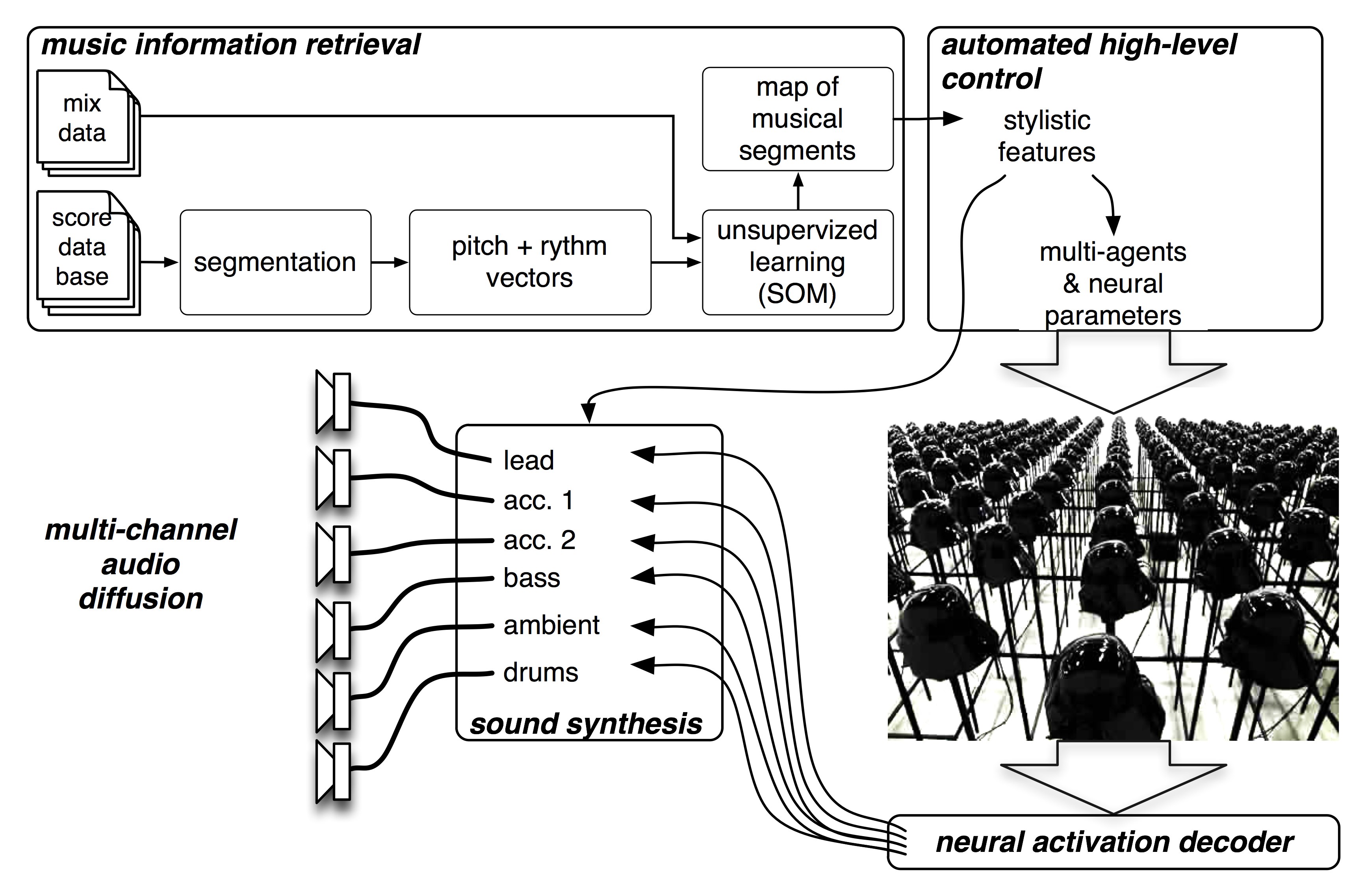

Melodic structures

The part of the system that deals with short-term musical structures (themes) is at the centre of the machine-generated music. For this, we adapted an extension of the classic artificial neural network architecture called the Self-Organizing Map (SOM). This extension, called a Recursive Oscillatory Self-Organizing Map (ROSOM), is inspired by topological maps observed in the brain and by systems of coupled oscillators, which are found frequently in nature (heart cells, neuronal oscillation, synchronizing fireflies). A ROSOM is capable not only of learning and categorizing musical material without human intervention (it is an unsupervised neural network), but also of analyzing and storing temporal sequences and of detecting and reproducing periodicities (loops) in music. By tuning this complex network of artificial neurons and coupled oscillators to our needs, we were able to create a system that generates continuous variations of given musical themes. These variations could be influenced by a variety of factors, most importantly by learning new musical themes, which extends the knowledge and musical activity of the system and thereby creates richer and more varied sequences.
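As a point of reference for the self-organizing behaviour described above, here is a minimal sketch of a plain SOM in Python. It is not the ROSOM: the recursive and oscillatory parts are left out entirely. The grid size, learning rate, neighbourhood width, and the names `train_som` and `best_matching_unit` are illustrative assumptions, not values or code from the installation.

```python
import math
import random


def best_matching_unit(weights, x):
    """Return (row, col) of the unit whose weight vector is closest to x."""
    best, best_dist = None, float("inf")
    for r, row in enumerate(weights):
        for c, w in enumerate(row):
            dist = sum((wi - xi) ** 2 for wi, xi in zip(w, x))
            if dist < best_dist:
                best, best_dist = (r, c), dist
    return best


def train_som(data, rows=4, cols=4, epochs=50, lr0=0.5, sigma0=2.0, seed=0):
    """Train a rows x cols map on a list of equal-length feature vectors."""
    rng = random.Random(seed)
    dim = len(data[0])
    # Start from random weights in [0, 1); inputs are assumed to be normalized.
    weights = [[[rng.random() for _ in range(dim)] for _ in range(cols)]
               for _ in range(rows)]
    for epoch in range(epochs):
        frac = epoch / epochs
        lr = lr0 * (1.0 - frac)                   # learning rate decays over time
        sigma = max(sigma0 * (1.0 - frac), 0.5)   # neighbourhood shrinks over time
        for x in data:
            br, bc = best_matching_unit(weights, x)
            for r in range(rows):
                for c in range(cols):
                    grid_d2 = (r - br) ** 2 + (c - bc) ** 2
                    h = math.exp(-grid_d2 / (2.0 * sigma ** 2))
                    w = weights[r][c]
                    # Pull the unit towards the input, weighted by map distance.
                    for i in range(dim):
                        w[i] += lr * h * (x[i] - w[i])
    return weights


if __name__ == "__main__":
    # Toy "themes": two loose clusters of 2-dimensional feature vectors.
    themes = [[0.0, 0.0], [0.05, 0.05], [1.0, 1.0], [0.95, 0.95]]
    som = train_som(themes)
    for theme in themes:
        print(theme, "->", best_matching_unit(som, theme))
```

Units that are close on the grid end up with similar weight vectors, which is the property that lets similar musical material settle in neighbouring regions of the map.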

Other factors influencing the musical output included the activity of neighboring agents, internal learning rates, sensitivity to temporal features as opposed to melodic features, and many more. All of these parameters provided us with a multitude of high-level controls for creating new and expressive themes, the basic building blocks for our music.

Music information retrieval

There are myriad ways of representing music digitally, either as an acoustic signal (mp3 files, for example) or as a symbolic score (MIDI, MusicXML, etc.). For LMD we opted for a symbolic approach, meaning that our agents manipulated scores (abstract representations of pitch, duration and velocity) which were then performed by a set of instruments: digital synthesizers in Native Instruments’ Reaktor application. These synths were compiled and created by Olivier Pasquet.

The way in which musical information is represented is crucial for the actual musical output of a system. The representation defines the “perception” of our machines and thereby the parameters which they process, vary and take into account. For example, by choosing a score-based approach rather than one which captures the spectral content of the musical material, we consciously discard any information on the timbre and color of the musical themes being processed, leaving us the freedom to choose the instrumental timbres that perform the newly generated score.

Once we had designed vectors which represented the scores in a way that produced interesting results from the agents, we applied this representation to the entire database of musical fragments. This database was the main “knowledge pool” for the system, which it learned from and tried to imitate. This core part of the system defines the musical styles from which all new musical output is derived. For a selected list of titles present in the database please refer to the end of this paper.
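To make the idea of a score-based vector concrete, the following Python sketch turns a short fragment of notes into a fixed-length vector of normalized pitch, duration and velocity values. The actual vector design used for LMD is not described here, so the `Note` class, the `encode_fragment` function, the padding scheme and the limits `max_notes` and `max_beats` are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Note:
    pitch: int       # MIDI note number, 0-127
    duration: float  # length in beats
    velocity: int    # MIDI velocity, 0-127


def encode_fragment(notes: List[Note], max_notes: int = 8,
                    max_beats: float = 4.0) -> List[float]:
    """Encode up to max_notes notes as [pitch, duration, velocity, ...] in [0, 1]."""
    vector: List[float] = []
    for note in notes[:max_notes]:
        vector.extend([
            note.pitch / 127.0,
            min(note.duration, max_beats) / max_beats,  # clip very long notes
            note.velocity / 127.0,
        ])
    # Pad short fragments with zeros so every vector has the same length.
    vector.extend([0.0] * (3 * max_notes - len(vector)))
    return vector


if __name__ == "__main__":
    bass_line = [Note(36, 2.0, 110), Note(39, 1.0, 90), Note(41, 1.0, 100)]
    print(encode_fragment(bass_line, max_notes=4))
```

Nothing in such a vector describes timbre, which mirrors the trade-off discussed above: the representation fixes what the agents can perceive, and the choice of instrument remains free.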

Harmonic and rhythmic analysis

Once again we relied on the self-organizing characteristics of a SOM. Using this algorithm, we were able to have the machine automatically organize and categorize hundreds of musical fragments on a two-dimensional map, based on their harmonic profile and any other parameter we might consider important for combining the different voices. Once this map was established, we could choose an arbitrary fragment for a bass line, for example, and look up similar fragments for the lead or any other voice, thus ensuring coherence by similarity between voices. By enlarging the radius inside which new fragments may be chosen, we could gradually adjust the amount of “tonal freedom” the agents have when playing together, ranging from homophony to “dodecaphonic freestyle”.
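The radius-based lookup can be sketched in a few lines of Python. This assumes that each fragment has already been assigned a (row, column) position on the trained map; the fragment names, the positions and the function `fragments_within_radius` are invented for the example and do not come from the LMD code.

```python
import math
from typing import Dict, List, Tuple


def fragments_within_radius(positions: Dict[str, Tuple[int, int]],
                            chosen: str, radius: float) -> List[str]:
    """Return the other fragments whose map position lies within radius of chosen."""
    cr, cc = positions[chosen]
    return sorted(
        name
        for name, (r, c) in positions.items()
        if name != chosen and math.hypot(r - cr, c - cc) <= radius
    )


if __name__ == "__main__":
    # Hypothetical map positions for a bass line and three candidate leads.
    positions = {
        "bass_a": (0, 0),
        "lead_a": (0, 1),
        "lead_b": (2, 2),
        "lead_c": (5, 5),
    }
    for radius in (1, 3, 10):
        print(radius, fragments_within_radius(positions, "bass_a", radius))
```

A small radius only admits fragments that the map considers close to the chosen one, while a large radius admits nearly everything, which corresponds to the range between tightly matched voices and free combination.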

Even though this method relies more on topological similarity than on actual harmonic identity, it remains very flexible and easy to use, especially because the musical fragments are not limited to their harmonic profile (pitch-class histograms) but can also carry information on rhythmic structure, density and register, among many other possible parameters. By choosing the right representation and weighting the different features of the musical fragments, this musical topology becomes the main theater for the agents and a versatile tool for defining high-level attributes of the resulting music.

Stylistic vocabulary of darkness

The main factor influencing musical style is the song database. Its variety contains the melodic and rhythmic vocabulary from which the system learns. This keeps the generated music centered around a certain set of styles defined by the database. The second factor for style is the set of sounds (effects and synthesizers) used to play the computer-generated scores. For this we looked for a very evil, deep and dark electronic sound. The collaboration with several musicians from the French electronica scene, such as Franck Rivoire / DANGER (Ekleroshock records) and Klement (Contre Coeur records), was a necessary and highly interesting phase of the work. In order to quantify their personal and intuitive mixing techniques, such as varying compressors, reverbs and filters, we asked the musicians working with us to remix scores manually with the same set of synthesizers used for the computer-generated mixes. During these mixing sessions, we recorded not only the sound performed by the musician but also all the manipulations of synths, effects and mixers directly inside the computer. With this precise transcription of mixing techniques, and through the musicians’ feedback, we provided the system with a selection of techniques which were used for the real-time control of the synthesizers and effects.
For LMD we chose a periodically evolving mix in which each cycle would last up to forty minutes. During this time the music starts off in an ambient style, generally dominated by long basses and intermittent bass drums with varying reverb. From here the mix slowly moves on to denser melodic lines and varying motifs, reaching an alternating theme-refrain structure. Finally the loops get shorter and the sounds harder, until we reach a soaring, highly compressed hardcore sound which eventually gives way to the next texture, announcing a new cycle.

System Overview

Manually segmented songs and mix data are processed and formatted for the score database using shell scripts and Common Lisp programs. The cluster is made of 300 ARM-based embedded computers with 256 MB of RAM each (Calao Systems). At boot time, each embedded computer starts a GNU/Linux operating system (uClibc) which automatically runs shell scripts to instantiate a neuronal agent written in C (Java for beta testing). Python scripts are used for UDP communication among agents and for receiving and sending cues and vectors. A Linux (Debian) computer runs a Pure Data process controlling the agents and routing neural activations via UDP for audio synthesis. Six synthesizers built with Native Instruments’ Reaktor environment are loaded as VST plugins inside Max/MSP (Cycling ’74) on OS X. Max is also used for the conversion of the UDP messages to MIDI and controller data and for the multichannel sound system (Meyer Sound). Live recordings were made using Pure Data on a separate Linux machine with optional intranet and internet streaming capabilities. Finally, a third Linux machine was used for maintenance over the intranet and the internet using VNC and SSH.

Selected list of artists from the score database

Alizée

Credits

Last Manoeuvres in the Dark

Music and « system » design

Programming

Mixers